Most enterprise chatbot projects fail the same way.

They start with strong intent — reduce support load, surface internal knowledge faster, give teams a better self-service experience. They end with a bot that confidently answers the wrong questions, hallucinates policy details, and gets quietly abandoned six months after launch.

The problem isn’t the technology. It’s the architecture decision made at the beginning.

Standard LLM-powered chatbots generate responses from what the model learned during training. In an enterprise context, that’s almost never enough. Your organization’s knowledge — product documentation, HR policies, legal contracts, support runbooks, internal processes — isn’t in any training dataset. The model doesn’t know it. And when it doesn’t know something, it doesn’t say “I don’t know.” It makes something up that sounds correct.

That’s where Retrieval-Augmented Generation changes the equation entirely.

RAG-powered chatbots don’t rely on what the model memorized. They retrieve relevant information from your actual knowledge sources in real time, then generate a response grounded in that content. The result is a chatbot that answers from your documents, your data, and your current truth, not a statistical approximation of what the answer might be.

For enterprises serious about deploying AI that works reliably, RAG isn’t optional. It’s the foundational architecture decision. Here’s how to build it correctly.

What RAG Actually Is (And What It Isn’t)

Let’s simplify this before going deeper.

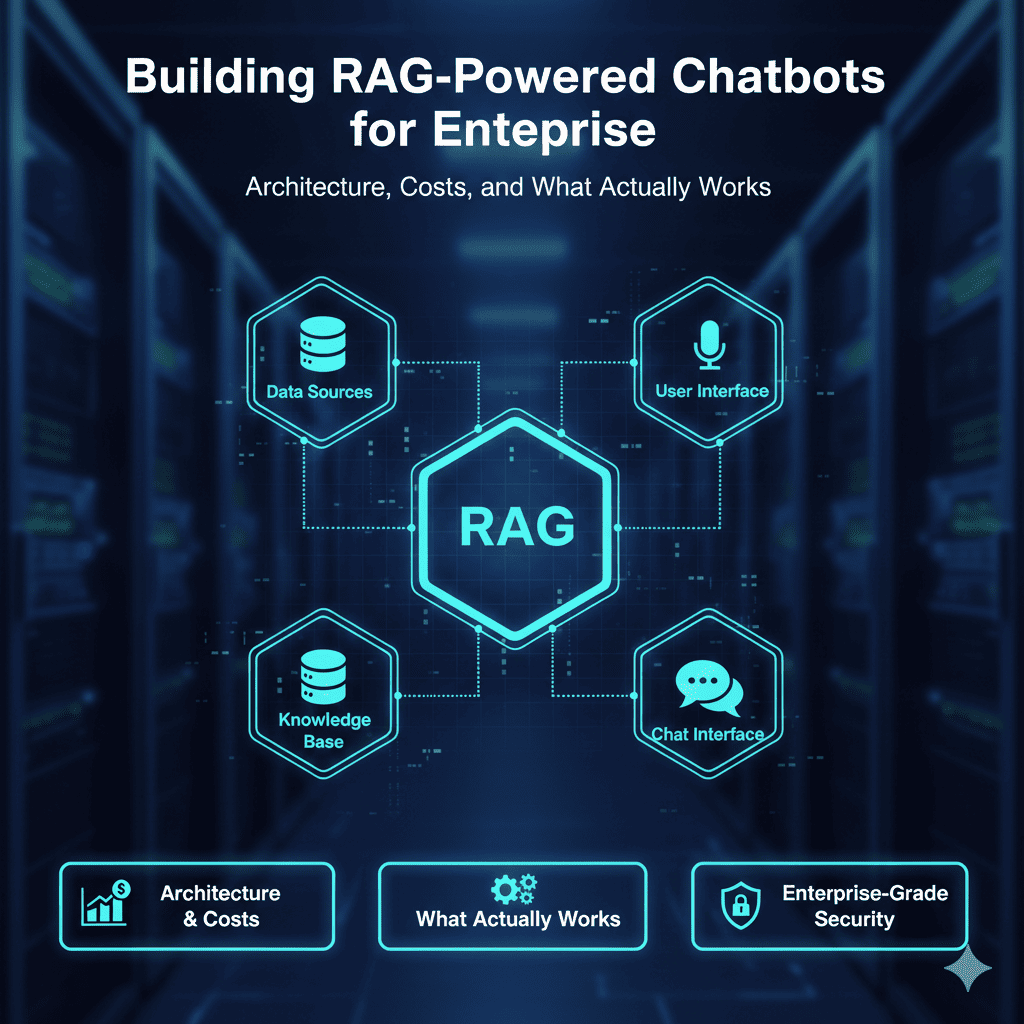

RAG stands for Retrieval-Augmented Generation. The name describes exactly what happens:

- A user asks a question

- The system retrieves the most relevant content from a defined knowledge base

- That retrieved content is passed to the LLM as context

- The LLM generates a response grounded in that context — not from training memory alone

Think of it like this: a standard LLM is a brilliant generalist who studied everything before walking into the room. A RAG-powered system is the same generalist, but now they have your company’s full document library open on the desk in front of them before answering.

The generalist is still doing the reasoning. The quality of what’s on the desk determines the quality of the answer.
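The four steps above can be sketched in a few lines. This is a minimal illustration, assuming a toy three-document knowledge base and a word-overlap score standing in for real embeddings. The names (`KNOWLEDGE_BASE`, `retrieve`, `build_prompt`) are hypothetical, and the final LLM call is left out because it depends on your provider.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base, for illustration only.
KNOWLEDGE_BASE = {
    "returns.md": "Customers may return unused items within 30 days for a full refund.",
    "shipping.md": "Standard shipping takes 3 to 5 business days within the country.",
    "warranty.md": "Hardware is covered by a 12 month limited warranty.",
}


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    """Step 2: return the k (name, text) pairs most similar to the question."""
    q = tokenize(question)
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: similarity(q, tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Step 3: pass the retrieved content to the LLM as context."""
    context = "\n".join(f"[{name}] {text}" for name, text in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Step 4 is whatever call sends `build_prompt(...)` to your model. Everything the model can ground its answer in is already fixed by what `retrieve` returned.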

What RAG is not:

It’s not fine-tuning. Fine-tuning updates the model’s weights with new training data, which is expensive and time-consuming and still leaves the model prone to hallucination. RAG retrieves at inference time, which means your knowledge base can be updated without retraining anything.

It’s not just keyword search. RAG uses semantic search — embedding-based similarity matching — to find conceptually relevant content even when the user’s phrasing doesn’t match the document’s exact language.

It’s not plug-and-play. The quality of a RAG system depends on the quality of your data pipeline, chunking strategy, embedding model, retrieval logic, and prompt design. These decisions compound.

The Enterprise Case for RAG: Where It Delivers Real Value

Before designing the architecture, it’s worth being precise about where RAG-powered chatbots actually earn their investment in enterprise contexts.

Internal Knowledge Management

The average enterprise knowledge base is a graveyard. Confluence pages last updated in 2021, SharePoint folders no one can navigate, PDFs that contain the correct answer but can’t be found in time. RAG transforms static knowledge stores into queryable, conversational interfaces. Employees ask in plain language. The system retrieves the right document section and synthesizes a clear answer.

For organizations with large onboarding documentation loads, compliance policy libraries, or technical runbooks, the productivity impact compounds quickly. Gartner estimates that employees spend an average of 2–3 hours per day searching for information. A well-deployed RAG system meaningfully reduces that number.

Customer-Facing Support Automation

Unlike generic LLM chatbots that invent answers to product questions, RAG-powered support bots retrieve from your actual documentation: product specs, return policies, troubleshooting guides, API references. Answers are grounded in what you’ve published, provided retrieval surfaces the right passage.

For eCommerce, SaaS, and financial services companies, this means deflection rates that hold up under scrutiny because the bot is answering accurately, not confidently incorrectly.

Legal, Compliance, and Contract Intelligence

Legal and compliance teams spend significant time searching across contracts, regulatory guidance, and policy documents for specific clauses or requirements. A RAG system indexed against contract libraries, regulatory frameworks, and internal compliance documentation gives analysts a natural language interface to information retrieval that would otherwise take hours.

Sales and Revenue Enablement

Sales teams need fast access to competitive battlecards, pricing matrices, case studies, and product positioning — in context, during conversations. A RAG-powered internal tool that retrieves the right assets based on a deal description or prospect question compresses research time and improves response quality.

The Architecture of a Production-Grade RAG System

This is where implementation quality separates deployments that work from deployments that get abandoned.

A production RAG system has five core layers. Each layer has decisions that determine the system’s accuracy, speed, and maintainability.

Layer 1: Data Ingestion and Preprocessing

Before anything gets retrieved, your source documents need to be ingested, cleaned, and structured. This is the most underestimated phase of RAG implementation.

Source types a typical enterprise needs to handle:

- PDFs (structured and unstructured)

- Word and Google Docs

- Confluence and Notion pages

- SharePoint libraries

- Zendesk or ServiceNow knowledge bases

- Slack or Teams conversation archives

- Structured database content (product catalogs, pricing tables)

Each source type requires different parsing logic. PDFs with embedded tables need different treatment than a Confluence page with embedded images and links. Scanned PDFs require OCR preprocessing before text extraction. Documents with version histories need freshness logic — you don’t want the bot retrieving outdated policy documents.
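A rough sketch of two ideas from this layer: per-source parsing and freshness logic. It assumes each document carries a stable `doc_id` and an `updated` date. The `SourceDoc` type and both parser functions are hypothetical placeholders, not real library calls.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SourceDoc:
    doc_id: str       # stable identifier shared by all versions of a document
    source_type: str  # e.g. "pdf" or "confluence"
    updated: date
    raw: str


def parse_pdf(raw: str) -> str:
    # Placeholder: a real parser would handle tables and run OCR on scans.
    return " ".join(raw.split())


def parse_wiki(raw: str) -> str:
    # Placeholder: a real parser would strip macros, images, and link markup.
    return raw.replace("{toc}", "").strip()


# One parser per source type; unknown types fail loudly instead of silently.
PARSERS = {"pdf": parse_pdf, "confluence": parse_wiki}


def latest_versions(docs: list[SourceDoc]) -> list[SourceDoc]:
    """Freshness logic: keep only the newest version of each doc_id."""
    newest: dict[str, SourceDoc] = {}
    for doc in docs:
        current = newest.get(doc.doc_id)
        if current is None or doc.updated > current.updated:
            newest[doc.doc_id] = doc
    return list(newest.values())


def ingest(docs: list[SourceDoc]) -> dict[str, str]:
    """Return {doc_id: clean_text} for the freshest version of each document."""
    cleaned = {}
    for doc in latest_versions(docs):
        parser = PARSERS.get(doc.source_type)
        if parser is None:
            raise ValueError(f"no parser registered for {doc.source_type!r}")
        cleaned[doc.doc_id] = parser(doc.raw)
    return cleaned
```

Raising on an unregistered source type is a deliberate choice here: a source that silently fails to ingest is hard to notice until users start getting stale answers.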

The output of this layer is clean, structured text ready for chunking.

Layer 2: Chunking Strategy

Chunking is how you break documents into retrievable units. It sounds straightforward. It isn’t.

Chunk too large: retrieval pulls in too much irrelevant context, which dilutes the LLM’s focus and increases token costs. Chunk too small: you lose the surrounding context that makes an answer meaningful. A single sentence retrieved without its surrounding paragraph often can’t support a correct answer.

Common chunking approaches:

| Strategy | Best For | Trade-off |
|---|---|---|
| Fixed-size (token-based) | Simple docs, consistent formatting | Can split mid-concept |
| Sentence-level | Conversational content, FAQs | Loses multi-sentence context |
| Paragraph-level | Policy docs, runbooks | Variable chunk size |
| Semantic chunking | Complex mixed-format content | Higher compute cost |
| Hierarchical chunking | Long documents with clear sections | Best accuracy, more complex |

For most enterprise deployments, a hybrid approach — paragraph-level chunking with overlapping windows and hierarchical metadata tagging — outperforms any single strategy.

Overlapping windows matter because a key sentence might fall at the boundary of two chunks. With a 10–15% overlap, a sentence that straddles a boundary is much more likely to appear whole in at least one retrievable unit, as long as it is shorter than the overlap itself.
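To make the overlap idea concrete, here is a minimal sketch of fixed-size chunking with an overlapping window. It counts whitespace-separated words rather than model tokens, which is a simplification. `chunk_words` and its default values are illustrative assumptions, not a recommended configuration.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap_ratio: float = 0.15) -> list[str]:
    """Split text into word windows of chunk_size that overlap by overlap_ratio."""
    if chunk_size <= 0 or not 0 <= overlap_ratio < 1:
        raise ValueError("chunk_size must be positive and overlap_ratio in [0, 1)")
    words = text.split()
    overlap = int(chunk_size * overlap_ratio)
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

With `chunk_size=10` and `overlap_ratio=0.2`, each chunk repeats the last two words of the previous one, so a short phrase sitting on a boundary still appears intact in one of them. A paragraph-aware version would apply the same windowing to paragraphs instead of words.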

Layer 3: Embedding and Vector Storage

After chunking, each chunk is converted into a vector embedding — a numerical representation of its semantic meaning. These embeddings are stored in a vector database, which enables similarity search at retrieval time.
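The embed, store, search loop can be sketched without any external service. This toy version assumes a hashing-based `toy_embed` function in place of a real embedding model and a plain Python list in place of a vector database. Both are stand-ins for illustration, and the class name `InMemoryVectorStore` is hypothetical.

```python
import hashlib
import math
import re

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def toy_embed(text: str) -> list[float]:
    """Hash each word into one of DIM buckets, then L2-normalize.

    A stand-in only: real embedding models capture meaning, not just word identity.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Stores (chunk_id, vector, text) rows and searches them by cosine similarity."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, list[float], str]] = []

    def add(self, chunk_id: str, text: str) -> None:
        self._rows.append((chunk_id, toy_embed(text), text))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = toy_embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), cid) for cid, vec, _ in self._rows]
        scored.sort(reverse=True)
        return [(cid, score) for score, cid in scored[:k]]
```

A production vector database replaces the linear scan in `search` with an approximate nearest-neighbor index, but the contract is the same: text in, ranked chunk IDs with scores out.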

Embedding model selection affects retrieval quality significantly.

| Embedding Model | Strengths | Enterprise Fit |
|---|---|---|
| OpenAI text-embedding-3-large | High accuracy, well-supported | Strong for general enterprise |
| Cohere Embed v3 | Multilingual, retrieval-optimized | Good for global organizations |
| BGE-M3 (open source) | High performance, self-hostable | Strong for data-sensitive environments |
| Voyage AI | Domain-specific variants available | Legal, finance, medical use cases |

Vector database options:

| Platform | Best For |
|---|---|
| Pinecone | Managed, scalable, fast setup |
| Weaviate | Hybrid search (semantic + keyword), open source |
| Qdrant | High performance, self-hosted option |
| pgvector (PostgreSQL) | Teams already on Postgres, lower complexity |
| Azure AI Search | Microsoft-stack enterprises |
| Amazon OpenSearch | AWS-native environments |

Database selection should be driven by your existing infrastructure, data residency requirements, and scale expectations — not by what’s trending.

Layer 4: Retrieval Logic

When a user submits a query, the retrieval layer converts it to an embedding and finds the most semantically similar chunks in the vector database. This sounds simple. In practice, the retrieval logic design determines whether the system finds the right content or the approximately right content.

Pure vector search is a good starting point. But it misses exact-match requirements — product codes, specific clause numbers, named entities that don’t carry semantic weight in embedding space.

Hybrid search, which combines vector similarity with BM25 keyword search, typically outperforms either method alone on enterprise content. Many production systems now treat hybrid retrieval as the baseline.
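The text doesn’t prescribe how the two result lists get combined. One common option is reciprocal rank fusion (RRF), sketched below as an assumption rather than the only way to do it. The function takes ranked lists of chunk IDs, one from vector search and one from keyword search. The constant `k=60` is a conventional default, not a tuned value.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of chunk IDs into a single ranking.

    Each chunk scores sum(1 / (k + rank)) over the lists it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    # Sort by score descending; break ties by ID so output is deterministic.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))


vector_hits = ["c1", "c2", "c3"]   # hypothetical semantic-search ranking
keyword_hits = ["c4", "c2", "c1"]  # hypothetical BM25 ranking
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

RRF only needs ranks, not raw scores, which sidesteps the problem that BM25 scores and cosine similarities live on different scales.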

Reranking adds a second pass after initial retrieval. A cross-encoder reranking model (like Cohere Rerank or a fine-tuned BERT variant) re-scores the retrieved candidates for relevance before passing them to the LLM. This adds latency but meaningfully improves precision — especially for complex multi-concept queries.
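Structurally, reranking is a second scoring pass over a small candidate set. The sketch below assumes a pluggable `score_fn`. The `toy_overlap_score` used here is only a placeholder for a real cross-encoder, which would read the query and passage together.

```python
from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    score_fn: Callable[[str, str], float],
    top_n: int = 3,
) -> list[str]:
    """Re-score first-pass candidates and keep the top_n most relevant."""
    scored = [(score_fn(query, text), text) for text in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_n]]


def toy_overlap_score(query: str, passage: str) -> float:
    """Placeholder scorer: fraction of query words that appear in the passage."""
    q = set(query.lower().split())
    p = set(passage.lower().split())
    return len(q & p) / len(q) if q else 0.0
```

Because the scorer runs once per candidate, the latency cost scales with how many candidates the first pass returns. That is why reranking is usually applied to a few dozen results rather than the whole index.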

Query decomposition handles multi-part questions by breaking the user’s query into sub-queries, retrieving separately, and synthesizing results. Essential for enterprise use cases where users ask compound questions.
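A deliberately naive sketch of the decompose, retrieve, merge pattern. Production systems typically ask an LLM to produce the sub-queries. The regex split here is an assumption made to keep the example self-contained, and `retrieve` is a hypothetical callable that returns chunk IDs.

```python
import re
from typing import Callable


def decompose(query: str) -> list[str]:
    """Split a compound question on '?', ';', and the word 'and'."""
    parts = re.split(r"\?|;|\band\b", query)
    return [part.strip() for part in parts if part.strip()]


def retrieve_for_all(
    query: str,
    retrieve: Callable[[str], list[str]],
    k: int = 2,
) -> list[str]:
    """Retrieve per sub-query, then merge results without duplicates."""
    seen: set[str] = set()
    merged: list[str] = []
    for sub_query in decompose(query):
        for chunk_id in retrieve(sub_query)[:k]:
            if chunk_id not in seen:
                seen.add(chunk_id)
                merged.append(chunk_id)
    return merged
```

Splitting on "and" will mangle questions like "terms and conditions", which is exactly why a model-based decomposer is the usual choice once this pattern proves useful.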

Layer 5: Generation and Response Design

The retrieved chunks are assembled into a prompt context and passed to the LLM for response generation. The prompt design at this layer determines tone, citation behavior, fallback handling, and safety guardrails.

Key decisions here:

Citation and sourcing: Should the bot surface the source document and section? For compliance and legal use cases, this is non-negotiable. Users need to verify. For customer support, citations are less critical but still build trust.
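One way to get citations is to number the retrieved chunks in the prompt and ask the model to cite by number. The sketch below assumes a hypothetical `RetrievedChunk` shape with `source` and `section` fields. The instruction wording is illustrative and would need testing against your model.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    source: str   # document title or path
    section: str  # heading or clause the chunk came from
    text: str


def build_cited_prompt(question: str, chunks: list[RetrievedChunk]) -> str:
    """Number each source so the model can cite it as [1], [2], and so on."""
    lines = [
        "Answer the question using only the numbered sources below.",
        "Cite the sources you used in square brackets, for example [1].",
        "",
    ]
    for i, chunk in enumerate(chunks, start=1):
        lines.append(f"[{i}] {chunk.source}, {chunk.section}: {chunk.text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


def source_list(chunks: list[RetrievedChunk]) -> list[str]:
    """Human-readable references to show under the answer."""
    return [f"[{i}] {c.source} ({c.section})" for i, c in enumerate(chunks, start=1)]
```

Keeping `source_list` separate from the prompt means the interface can render verifiable links even if the model’s inline citations are imperfect.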

Confidence thresholds and fallback logic: What happens when retrieval finds nothing above a similarity threshold? The bot should say “I don’t have information on that” rather than fabricate an answer. This requires an explicit fallback instruction in the system prompt, ideally backed by a threshold check in code before the model is called.
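A minimal sketch of threshold-based fallback, assuming retrieval returns `(text, score)` pairs and `generate` is whatever function calls your LLM. The `0.75` cutoff is an arbitrary placeholder. A real threshold has to be calibrated against your embedding model and evaluation set.

```python
from typing import Callable

FALLBACK = "I don't have information on that."


def select_context(hits: list[tuple[str, float]], min_score: float = 0.75) -> list[str]:
    """Keep only chunks whose similarity score clears the threshold."""
    return [text for text, score in hits if score >= min_score]


def answer(
    question: str,
    hits: list[tuple[str, float]],
    generate: Callable[[str], str],
    min_score: float = 0.75,
) -> str:
    """Return the fallback without calling the LLM when nothing relevant was found."""
    context = select_context(hits, min_score)
    if not context:
        return FALLBACK
    prompt = (
        "Answer using only the context below. If the context does not contain "
        f"the answer, reply exactly: {FALLBACK}\n\n"
        "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    )
    return generate(prompt)
```

The check in code and the instruction in the prompt cover different failures: the first handles empty retrieval, the second handles retrieval that cleared the threshold but still doesn’t contain the answer.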

Guardrails: What topics are out of scope? What happens if a user asks something the system shouldn’t answer? Guardrail layers (using tools like Guardrails AI, Llama Guard, or custom classification) prevent scope drift and reduce liability exposure.

LLM selection for generation:

| Model | Strengths | Enterprise Consideration |
|---|---|---|
| GPT-4o (OpenAI) | High reasoning, strong instruction following | Data privacy via API |
| Claude 3.5 Sonnet (Anthropic) | Long context, strong document synthesis | Strong for policy/legal content |
| Gemini 1.5 Pro (Google) | Long context window, multimodal | Google Workspace integration |
| Llama 3.1 (Meta, open source) | Self-hostable, no data egress | Air-gapped or high-security environments |
| Mistral Large | European data residency option | GDPR-sensitive deployments |

What Enterprise RAG Implementation Actually Costs

Let’s put real numbers on this.

Cost drivers: source system complexity, document volume, infrastructure model (cloud-managed vs. self-hosted), LLM API vs. open-source model, and internal vs. agency implementation.

Phase 1: Discovery, Architecture Design, and Data Audit

Before a line of code is written, someone needs to map your knowledge sources, assess data quality, define retrieval requirements, and design the system architecture. Shortcutting this phase is the primary reason RAG projects underperform.

Cost: $15,000 – $40,000 (agency-led) | 3–6 weeks

Phase 2: Data Pipeline and Ingestion Development

Building the connectors, parsers, chunking logic, and preprocessing pipeline for your specific source systems.

Cost: $20,000 – $60,000 depending on source system complexity | 4–8 weeks

Phase 3: Vector Database Setup and Embedding Pipeline

Infrastructure provisioning, embedding model integration, vector store configuration, and initial indexing of your knowledge base.

Cost: $10,000 – $30,000 | 2–4 weeks

Phase 4: Retrieval and Generation Layer Development

Building the retrieval logic, reranking integration, prompt engineering, guardrails, and generation pipeline.

Cost: $25,000 – $70,000 | 5–10 weeks

Phase 5: Frontend Interface and Integration

Building the chat interface, integrating with your existing platforms (Slack, Teams, Zendesk, internal portal), and API development for downstream system connections.

Cost: $15,000 – $45,000 | 3–6 weeks

Phase 6: Testing, Evaluation, and QA

RAG systems require systematic evaluation — not just user testing. Retrieval accuracy, answer faithfulness, hallucination rate, and latency benchmarks need to be measured against a defined evaluation dataset before production deployment.

Cost: $10,000 – $25,000 | 2–4 weeks

Total Implementation Cost Summary:

| Environment | Estimated Range |
|---|---|
| Internal knowledge bot (3–5 source systems) | $80,000 – $180,000 |
| Customer-facing support bot (mid-market) | $120,000 – $250,000 |
| Enterprise-grade multi-source deployment | $200,000 – $500,000+ |

Ongoing operational costs:

| Cost Item | Monthly Estimate |
|---|---|
| LLM API usage (GPT-4o, Claude) | $2,000 – $15,000/month |
| Vector database hosting | $500 – $5,000/month |
| Embedding pipeline compute | $300 – $2,000/month |
| Monitoring and evaluation tooling | $500 – $2,500/month |
| Maintenance and content refresh | $5,000 – $15,000/month |

The content refresh cost is the one enterprises consistently underestimate. Your knowledge base changes. Policies update. Products evolve. Processes change. The RAG system needs a defined update cadence — documents re-ingested, chunks refreshed, indexes rebuilt. Without this, retrieval quality degrades as your knowledge base drifts from what’s indexed.
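For budgeting, it helps to add up the monthly ranges from the table above. The short calculation below uses only those figures. The helper names are illustrative.

```python
# Monthly ranges in USD, copied from the operational cost table.
MONTHLY_COSTS = {
    "LLM API usage": (2_000, 15_000),
    "Vector database hosting": (500, 5_000),
    "Embedding pipeline compute": (300, 2_000),
    "Monitoring and evaluation tooling": (500, 2_500),
    "Maintenance and content refresh": (5_000, 15_000),
}


def total_range(items: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the low ends and the high ends separately."""
    low = sum(lo for lo, _ in items.values())
    high = sum(hi for _, hi in items.values())
    return low, high


def share_of_total(items: dict[str, tuple[int, int]], name: str) -> tuple[float, float]:
    """Fraction of the low-end and high-end totals taken by one line item."""
    low, high = total_range(items)
    item_low, item_high = items[name]
    return item_low / low, item_high / high


monthly = total_range(MONTHLY_COSTS)     # (8300, 39500)
annual = tuple(12 * v for v in monthly)  # (99600, 474000)
```

Maintenance and content refresh accounts for about 60% of the low-end total and about 38% of the high-end total, which is why underestimating it distorts the whole operating budget.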

Evaluation: How to Know If Your RAG System Is Actually Working

Deploying a RAG chatbot without a formal evaluation framework is like launching an eCommerce store without conversion tracking. You don’t know what’s working, what isn’t, or what to improve.

The four metrics that matter most:

Retrieval Recall: Are the right documents being retrieved for a given query? Measure this by testing known question-document pairs and calculating the percentage of cases where the correct source appears in the top-k retrieved results.
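The measurement described here is often called recall@k or hit rate. A minimal sketch, assuming each test case pairs a question with the ID of the one document that should be retrieved, and `retrieve` is a hypothetical function returning ranked document IDs:

```python
from typing import Callable


def recall_at_k(
    test_cases: list[tuple[str, str]],
    retrieve: Callable[[str], list[str]],
    k: int = 5,
) -> float:
    """Fraction of questions whose expected document appears in the top k results."""
    if not test_cases:
        raise ValueError("test_cases must not be empty")
    hits = sum(
        1 for question, expected_id in test_cases
        if expected_id in retrieve(question)[:k]
    )
    return hits / len(test_cases)
```

Running this at several values of `k` shows whether failures are ranking problems (the right document is retrieved but too far down) or coverage problems (it is never retrieved at all).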

Answer Faithfulness: Is the generated response grounded in the retrieved content, or is the LLM drifting into hallucination? Tools like RAGAS (an open-source RAG evaluation framework) automate this measurement.

Answer Relevance: Is the response actually answering what was asked, or is it topically adjacent but not useful?

Latency: What is the end-to-end response time? Enterprise users have low tolerance for slow responses. A retrieval + reranking + generation pipeline running over 5 seconds will see adoption drop.
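End-to-end latency is easier to fix when you know which stage is slow. The sketch below times each stage separately using only the standard library. `StageTimer` is a hypothetical helper, and the 5-second default mirrors the figure above rather than any measured threshold.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time per named pipeline stage."""

    def __init__(self) -> None:
        self.durations: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.durations[name] = self.durations.get(name, 0.0) + elapsed

    def total(self) -> float:
        return sum(self.durations.values())

    def over_budget(self, budget_seconds: float = 5.0) -> bool:
        return self.total() > budget_seconds


timer = StageTimer()
with timer.stage("retrieval"):
    time.sleep(0.01)  # stand-in for the vector search call
with timer.stage("reranking"):
    time.sleep(0.01)  # stand-in for the reranker call
with timer.stage("generation"):
    time.sleep(0.01)  # stand-in for the LLM call
```

Logging `timer.durations` per request makes it clear whether to tune the index, shrink the rerank candidate set, or shorten the prompt.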

Build an evaluation dataset of 100–200 representative query-answer pairs before launch. Run formal evaluations weekly post-launch. Treat RAG quality like software quality — it needs continuous testing, not a one-time pass/fail.

What Separates Enterprise RAG Deployments That Work From Those That Don’t

After looking at what makes these implementations succeed or fail, a clear pattern emerges.

Data quality is the ceiling on system quality. If your source documents are inconsistently formatted, duplicated, outdated, or poorly structured, no retrieval strategy compensates for that. The document quality audit isn’t a pre-implementation checkbox — it’s a core determinant of outcome.

Chunking and retrieval deserve more engineering attention than the LLM selection. Most teams spend disproportionate time debating which model to use and underinvest in retrieval pipeline design. The LLM is the last step. If retrieval fails, the model has nothing good to work with.

User feedback loops are essential. Build thumbs-up/thumbs-down feedback into the interface from day one. Every negative signal is a retrieval or generation failure that needs diagnosis. Without feedback collection, you’re operating blind.

Access control is non-negotiable for enterprise. If your RAG system indexes documents from across the organization, it must respect document-level permissions. A junior employee querying the bot should not receive content from restricted executive or legal documents. This requires metadata-filtered retrieval tied to user identity — and it needs to be designed in from the beginning, not retrofitted later.
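A minimal sketch of metadata-filtered retrieval, assuming every chunk carries the set of groups allowed to read it and the user’s groups come from your identity provider. The `Chunk` type and the `search` callable are hypothetical. Most vector databases expose an equivalent filter parameter on their query API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read the source document


def permitted(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep chunks that share at least one group with the user."""
    return [c for c in chunks if c.allowed_groups & user_groups]


def retrieve_for_user(
    query: str,
    chunks: list[Chunk],
    user_groups: set[str],
    search: Callable[[str, list[Chunk]], list[Chunk]],
    k: int = 3,
) -> list[Chunk]:
    """Filter by permission first, so restricted text never reaches ranking or the prompt."""
    visible = permitted(chunks, set(user_groups))
    return search(query, visible)[:k]
```

Filtering before search, rather than after generation, is the important design choice: once restricted text is in the prompt, there is no reliable way to stop the model from paraphrasing it.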

Conclusion

RAG-powered chatbots are the practical path forward for enterprises that need AI to work reliably with their own knowledge rather than approximate it.

The architecture isn’t simple. The data pipeline requires real engineering discipline. The evaluation framework demands ongoing attention. And the implementation cost reflects the genuine complexity of building something that performs accurately at enterprise scale.

But when it’s built correctly — with clean data, thoughtful chunking, hybrid retrieval, and a proper evaluation loop — the ROI compounds. Support ticket deflection improves. Knowledge retrieval time drops. Onboarding accelerates. Legal and compliance teams move faster. Sales teams respond with better information.

The technology isn’t the risk. Underinvesting in the architecture and data quality is.

Build the foundation correctly. Run formal evaluations before launch. Design feedback loops in from day one. And treat the knowledge base as a living system that needs continuous maintenance — because the moment it stops being updated, the system starts degrading.

Done right, a RAG-powered enterprise chatbot isn’t just a productivity tool. It’s institutional knowledge — made queryable.

FAQ

What is RAG and how does it differ from a standard LLM chatbot?

RAG (Retrieval-Augmented Generation) retrieves relevant content from a defined knowledge base at the time of each query and grounds the LLM’s response in that retrieved content. A standard LLM chatbot generates responses from training memory alone, which cannot include your organization’s proprietary documentation or current operational knowledge.

How long does it take to build an enterprise RAG chatbot?

A production-grade deployment typically takes 18–36 weeks end-to-end, including discovery, data pipeline development, retrieval architecture, interface development, and pre-launch evaluation. Smaller internal knowledge bots with fewer source systems can be deployed in 12–18 weeks.

What is the most common reason enterprise RAG projects fail?

Poor data quality and inadequate retrieval architecture design. Teams frequently underinvest in document preprocessing, chunking strategy, and retrieval pipeline engineering — and overinvest in LLM selection debates. The model is the last step; if retrieval doesn’t surface the right content, model quality doesn’t matter.

What vector database should an enterprise use for RAG?

The right choice depends on your infrastructure context. Pinecone is strong for managed, scalable deployments. Weaviate offers hybrid search capabilities suited for mixed enterprise content. pgvector is a practical option for teams already operating on PostgreSQL. Data residency and security requirements should drive the final decision more than benchmarks alone.

How do you ensure a RAG chatbot doesn’t access restricted documents?

Through metadata-filtered retrieval tied to user identity and access control systems. Every document chunk in the vector store should carry metadata reflecting its access tier. Retrieval queries must include user-permission filters that prevent restricted content from surfacing for unauthorized users. This architecture must be designed in from the start — it cannot be added as an afterthought.

What are the ongoing costs of running an enterprise RAG system?

Monthly operational costs typically range from roughly $8,000 to $40,000 depending on LLM API usage volume, vector database scale, and maintenance cadence. Content refresh, meaning re-ingesting updated documents and rebuilding indexes, is the most frequently underestimated ongoing cost.

How do you measure whether a RAG system is performing well?

Through four core metrics: retrieval recall (are the right documents being found?), answer faithfulness (is the response grounded in retrieved content?), answer relevance (does the response address what was asked?), and latency (how fast is the end-to-end response?). Open-source evaluation frameworks like RAGAS automate much of this measurement against a defined test dataset.